
Videos

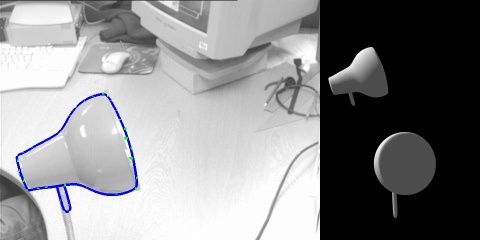

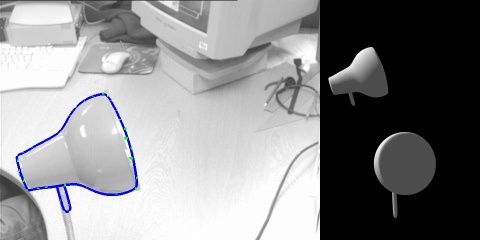

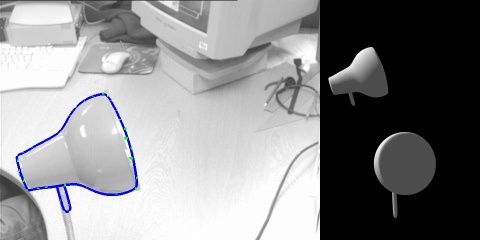

This video shows a desk lamp being tracked by following its apparent contour. The lamp is a good illustration of this tracking technique, since its contour is about the only useful feature it has. The left-hand frame shows the tracker in operation: the green lines are the edge-normal searches, and the blue line is placed where the tracker thinks the apparent contour should be. The top right frame shows a rendered version of the same view, and the bottom right frame is a novel synthetic view from in front of the lamp.

See Rapid rendering of apparent contours of implicit surfaces for realtime tracking.

Tracking a desk lamp [MPEG2] [2.8MB]
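An edge-normal search of the kind drawn as green lines can be sketched as follows. This is a minimal illustration under stated assumptions, not the tracker's actual code: the function name `edge_normal_search`, the nearest-neighbour sampling, the plain list-of-lists image, and the choice of the largest intensity step as "the edge" are all hypothetical. Given a point on the predicted contour and its unit normal, it samples the image along the normal and returns the signed distance to the strongest edge, which a tracker could then use as the measurement for that point.

```python
def edge_normal_search(image, x, y, nx, ny, search_range):
    """Search along the unit normal (nx, ny) through (x, y) for the strongest edge.

    Hypothetical sketch. image is a 2D list of grey levels indexed image[row][col].
    Returns the signed offset (in pixels, along the normal) of the largest
    intensity step, or None if the search line leaves the image or no step exists.
    """
    height, width = len(image), len(image[0])
    samples = []
    for t in range(-search_range, search_range + 1):
        # Nearest-neighbour sample at distance t along the normal.
        col = int(round(x + t * nx))
        row = int(round(y + t * ny))
        if not (0 <= row < height and 0 <= col < width):
            return None
        samples.append(image[row][col])

    best_offset, best_strength = None, 0
    for i in range(len(samples) - 1):
        # Edge strength: absolute intensity difference between neighbouring samples.
        strength = abs(samples[i + 1] - samples[i])
        if strength > best_strength:
            best_strength = strength
            # Place the edge halfway between the two samples.
            best_offset = (i - search_range) + 0.5
    return best_offset
```

For example, on an image whose columns 0 to 5 are dark and columns 6 to 9 are bright, searching from x = 4 along the normal (1, 0) with a range of 3 returns 1.5, placing the edge at x = 5.5, between the last dark and first bright column.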

This video shows the inside of my lab being tracked using a technique which robustly combines tracking information from a point-based tracker and an edge-based tracker. The strengths of the two systems can be seen in this video: large, unpredictable motions are tracked by the point-based tracker, and accumulated errors are removed by the edge-based tracker.

See Fusing points and lines for high performance tracking.

Tracking my lab [MPEG2] [7.0MB]
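One simple way to combine two trackers, shown here only as an illustration and not as the method used in the paper, is to fuse Gaussian estimates by inverse-variance weighting and reject the second estimate when it disagrees too strongly with the first. The sketch treats the pose as a single scalar; the function name `fuse_with_gating` and the gate threshold of 9 (three standard deviations) are assumptions.

```python
def fuse_with_gating(mean_a, var_a, mean_b, var_b, gate=9.0):
    """Fuse two scalar Gaussian estimates, rejecting b if it looks like an outlier.

    Hypothetical sketch, not the paper's fusion method.
    mean_a, var_a: estimate from the first tracker (e.g. point based).
    mean_b, var_b: estimate from the second tracker (e.g. edge based).
    gate: threshold on the squared normalized disagreement (assumed value).
    Returns (mean, variance, accepted).
    """
    # Squared Mahalanobis distance between the two estimates.
    d2 = (mean_a - mean_b) ** 2 / (var_a + var_b)
    if d2 > gate:
        # Disagreement too large: keep the first estimate unchanged.
        return mean_a, var_a, False
    # Inverse-variance weighting: the less certain estimate gets less weight.
    w_a = var_b / (var_a + var_b)
    mean = w_a * mean_a + (1.0 - w_a) * mean_b
    var = var_a * var_b / (var_a + var_b)
    return mean, var, True
```

With equal variances the fused mean is the midpoint: `fuse_with_gating(0.0, 1.0, 2.0, 1.0)` gives `(1.0, 0.5, True)`, while `fuse_with_gating(0.0, 1.0, 10.0, 1.0)` rejects the second estimate and returns `(0.0, 1.0, False)`. The gate is what makes the combination tolerant of an occasional wild measurement; a plain weighted average would be dragged halfway to it.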

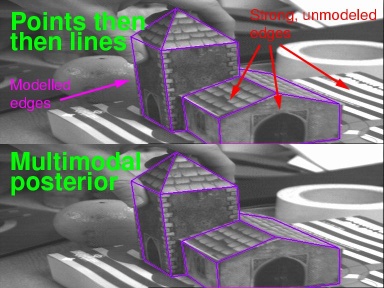

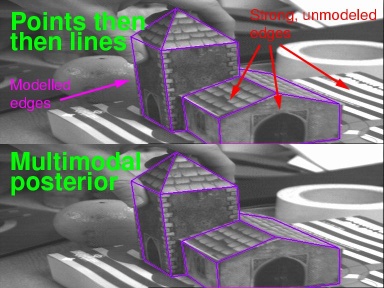

This video illustrates the advantage of using a non-trivial method to combine the results from a point-based and an edge-based tracker. If the point tracker is simply used to initialize the starting position of the edge tracker, the overall system breaks on this sequence. If the multimodal nature of the posterior is taken into account, the edge tracker can effectively be tested for failure, and once failures can be detected, they no longer cause tracking to fail.

See Fusing points and lines for high performance tracking.

Multimodal tracking [MPEG2] [1.7MB]
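The difference between naive initialization and testing for failure can be illustrated with a small multi-hypothesis check. In this hypothetical sketch, the posterior from the point tracker is approximated by a list of weighted modes; the edge tracker (`refine`) is run from every mode rather than only the most probable one, and the result is accepted only if its edge-fit `score` clears a threshold. The names `select_hypothesis`, `refine`, `score`, and `min_score` are assumptions, and this is a generic technique rather than the method from the paper.

```python
def select_hypothesis(modes, refine, score, min_score):
    """Run an edge-based refinement from each posterior mode and test for failure.

    Hypothetical sketch.
    modes: non-empty list of (weight, pose) pairs approximating a multimodal posterior.
    refine: callable pose -> pose, standing in for the edge tracker.
    score: callable pose -> float, higher meaning a better edge fit.
    min_score: threshold below which the edge result is treated as a failure.
    Returns (pose, ok).
    """
    best_pose, best_value = None, float("-inf")
    for _weight, pose in modes:
        refined = refine(pose)
        value = score(refined)
        if value > best_value:
            best_pose, best_value = refined, value
    if best_value < min_score:
        # Every mode gave a poor edge fit: report failure and fall back to the
        # most probable point-tracker mode instead of trusting the edge tracker.
        heaviest = max(modes, key=lambda m: m[0])[1]
        return heaviest, False
    return best_pose, True
```

As a toy one-dimensional example, suppose the true position is 5.0, the stand-in edge tracker converges only when started within 2 units of it, and the score is the negative distance to 5.0. With modes `[(0.7, 9.0), (0.3, 4.0)]`, starting only from the heaviest mode would leave the tracker at 9.0, whereas checking every mode recovers `(5.0, True)`. If neither mode is close, the function reports failure rather than returning a bad edge fit.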

Automated label placement

Videos of real-time automated label placement in an unstructured, cluttered environment. The videos illustrate object-specific labels, object-specific labels with directional placement constraints, and a screen-stabilized label.

See: Real-time Video Annotations for Augmented Reality.

Updated July 14th 2011, 11:54